Visually diagnosed medical tests (e.g., radiographs, electrocardiograms) are the most commonly ordered tests in front-line medicine. As such, front-line health care professionals must learn to interpret these images at an expert level by the time they provide opinions that guide patient management decisions. However, discordant interpretations of these images between front-line physicians and their expert counterparts (radiologists, cardiologists) are a common cause of medical error1–9. In pediatrics, the problem is even greater: physiology changes with age, which increases the risk of interpretation errors.

Currently, most approaches to learning medical image interpretation rely on case-by-case exposure in clinical settings and on tutorials that are either didactic or present cases passively online. However, clinical studies that examined the accuracy of front-line physicians in interpreting these images have not shown these strategies to be optimally effective. Furthermore, many continuing medical education activities emphasize clinical knowledge, provide no opportunity for feedback, and require little more than documentation of attendance, which limits the potential for improvement in the practicing physician10.

To bridge this knowledge-practice gap, we developed a medical education research program that answered a number of important research questions aimed at making online learning effective for the emergency medicine physician11–16.

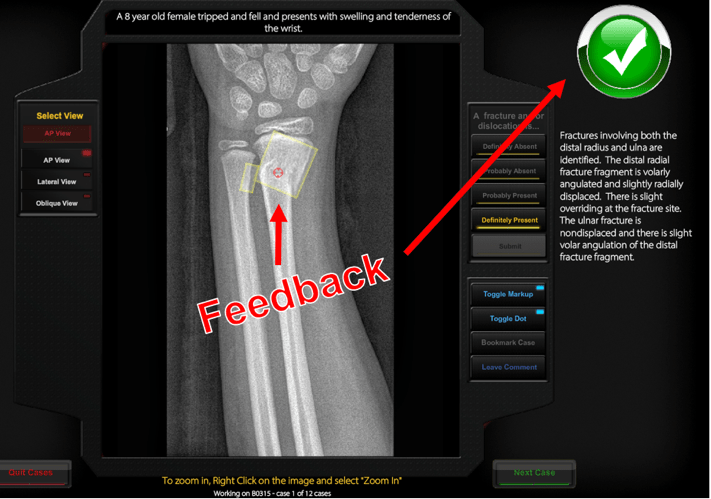

After 11 years of research, we have translated this evidence into a validated medical image interpretation learning system called ImageSim. ImageSim is a non-profit online learning program, available 24/7, offered by the Hospital for Sick Children, University of Toronto, as a residency training and Continuing Professional Development course. It teaches health care professionals to interpret visually diagnosed medical tests using the concepts of deliberate practice and cognitive simulation. That is, our learning model involves sustained, active practice on hundreds of cases: the learner makes the diagnosis for every case and then receives immediate, specific feedback on that interpretation, so that they learn from each case as they go (Figure 1).

Figure 1: After the learner interprets each case, the learning system provides instant text and visual feedback.

In this way, we embed assessment for learning rather than the usual approach, which is assessment of learning. Importantly, we present the images as we encounter them in practice, with a normal-to-abnormal radiograph ratio (and a spectrum of pathology) that reflects our day-to-day practice. After about 55 cases, the learner receives a running tabulation of performance measured as accuracy, sensitivity, and specificity. Participant performance is compared with benchmarks for graduating residents (Bronze), emergency physicians (Silver), and specialized experts (Gold). See a demonstration here.
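For readers less familiar with these measures, here is a minimal sketch of how such a running tabulation could be computed. It assumes each interpreted case is recorded as a pair of booleans (whether the case is truly abnormal, and whether the learner called it abnormal); the function name `running_metrics` and this data layout are hypothetical illustrations, not ImageSim's actual scoring code. Sensitivity is the fraction of abnormal cases the learner identified, specificity is the fraction of normal cases correctly called normal, and accuracy is the fraction of all cases interpreted correctly.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical record for one case: (case_is_abnormal, learner_called_abnormal).
CaseResult = Tuple[bool, bool]


def _ratio(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator / denominator, or None when there is nothing to divide by."""
    return numerator / denominator if denominator else None


def running_metrics(results: Iterable[CaseResult]) -> dict:
    """Tally a learner's interpretations and return accuracy, sensitivity, specificity.

    Illustrative sketch only; not ImageSim's implementation.
    """
    tp = tn = fp = fn = 0
    for truly_abnormal, called_abnormal in results:
        if truly_abnormal and called_abnormal:
            tp += 1  # abnormal case correctly called abnormal
        elif truly_abnormal:
            fn += 1  # abnormal case missed (called normal)
        elif called_abnormal:
            fp += 1  # normal case over-called as abnormal
        else:
            tn += 1  # normal case correctly called normal
    total = tp + tn + fp + fn
    return {
        "cases": total,
        "accuracy": _ratio(tp + tn, total),
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
    }


if __name__ == "__main__":
    # Five hypothetical cases: two abnormal, three normal.
    demo = [(True, True), (True, False), (False, False), (False, False), (False, True)]
    print(running_metrics(demo))
```

Because accuracy depends on the normal-to-abnormal mix of the cases seen, reporting sensitivity and specificity alongside it shows whether errors come mainly from missed pathology or from over-calling normal images.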

Our research to date shows that this learning method works: physicians at all levels of expertise significantly increased their image interpretation accuracy along a “learning curve,” as graphed below.

Active courses are available here and currently include pediatric musculoskeletal injuries (7 modules, 200–400 cases per module, 2,100 cases total) and pediatric chest radiographs (450 cases). Scheduled for release in early 2018 are courses on prepubertal female genital examination (150 cases) and pediatric point-of-care ultrasound (400 cases).

ImageSim has been accredited for Level 3 CME credits by the Royal College of Physicians and Surgeons of Canada and Level 2 CME credits by the College of Family Physicians of Canada. Registration information can be found here.

This post was copyedited and uploaded by Kevin Durr.
